Robot Surveillance Implementation at Hyperscale Data Center

August 2020 - April 2021

Overview

For our senior capstone project, a local company sponsored our team to develop Boston Dynamics' Spot robot as a security "watchdog" for deployment in its facility. After overcoming a steep learning curve with the robot, I assisted with the facial recognition work. Using the 360 camera, we implemented a body detection algorithm that determined where the main camera needed to focus to capture a higher-quality image, which in turn yielded accurate facial recognition results. Facial recognition was a key requirement from our sponsor to ensure that only authorized patrons were in a given location.

My Contributions

Working with Spot involved a steep learning curve, and we all had to play a part in getting the basics of the system up and running. At the start of the semester, one of my big contributions was working with both Boston Dynamics and BYU's Office of Information Technology so that Spot could connect to Eduroam, a WPA2 Enterprise network. Since Spot had only been publicly released a couple of months prior, it turned out that no Spot had ever attempted to connect to a WPA2 Enterprise network, and we were the ones to discover the bug. One aspect of the project that we loved was working with Boston Dynamics on this new product: we found bugs or requested features that were then released in subsequent software updates. Although Boston Dynamics fixed the issues on their end, we gave up on trying to cut through the red tape and purchased our own local network to connect Spot to. However, this severely limited our range and didn't allow us to take Spot on a walk across campus; it had to stay in the EB when running any of our code.

Besides being the network admin, I was on the autonomy team with another student, where we learned how to generate missions for Spot as well as cycle through new missions depending on which area needed to be surveyed next.

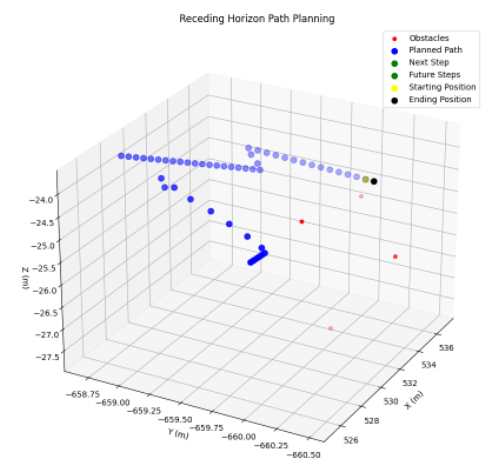

As the camera team was having issues reliably running facial detection on patrons, I proposed and developed the idea of using the lower-quality, general-purpose 360 camera to identify the location of patrons and then feeding their direction to the higher-quality camera, which could pan, tilt, and swivel. This solution decreased false negatives and increased the facial recognition rate. Output from the 360 camera while running body recognition is shown below.

Body recognition image from the live demo. The detected positions are used to turn the main camera and run facial detection.
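Since the project code cannot be shared, here is a minimal sketch of the general idea of turning a body detection in the 360 image into a direction for the main camera. It is not our actual implementation. It assumes the 360 frame is an equirectangular panorama whose horizontal center faces the robot's forward direction, and that a detector returns pixel-space bounding boxes. The names `BodyBox`, `box_to_pan_tilt`, and `head_fraction` are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass


@dataclass
class BodyBox:
    """Pixel-space bounding box of a detected person (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def box_to_pan_tilt(box, image_width, image_height, head_fraction=0.15):
    """Convert a body box in an equirectangular frame to (pan, tilt) in degrees.

    Assumptions (not taken from the real system):
      - the frame spans 360 degrees horizontally and 180 degrees vertically
      - the image center is straight ahead (pan 0) and level (tilt 0)
      - positive pan is to the right, positive tilt is up
    """
    # Horizontal center of the person.
    cx = (box.x_min + box.x_max) / 2.0
    # Aim near the top of the box, where the face is most likely to be.
    cy = box.y_min + head_fraction * (box.y_max - box.y_min)

    pan_deg = (cx / image_width - 0.5) * 360.0
    tilt_deg = (0.5 - cy / image_height) * 180.0
    return pan_deg, tilt_deg


if __name__ == "__main__":
    # A person detected a quarter turn to the right of center.
    person = BodyBox(x_min=1400, y_min=200, x_max=1600, y_max=800)
    print(box_to_pan_tilt(person, image_width=2000, image_height=1000))
```

The resulting angles would then be handed to whatever interface points the main camera. This sketch does not handle a box that straddles the left/right seam of the panorama, which a real deployment would need to consider.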

Things I Learned and Skills Developed

This project was my first exposure to industrial robotics and really opened my mind to different career opportunities. I realized I didn’t have to work for the manufacturer of these robots, in this case Boston Dynamics, to work hands-on with them; there are plenty of deployment-specific projects that require innovative ideas to accomplish. As a capstone team we only provided a proof of concept, but our sponsor’s vision for it went far beyond what we imagined, and he’ll need experienced engineers to achieve that vision. Those are the jobs that interest me. I want to work on all aspects of a robotic system instead of being confined to a small sub-team. This desire helped motivate me to pursue a PhD instead of a master’s degree. I also became a lot more familiar with Linux and working from the terminal.

Code

Code from this project cannot be shared.