Robot Baseball Catcher

February 2022

Overview

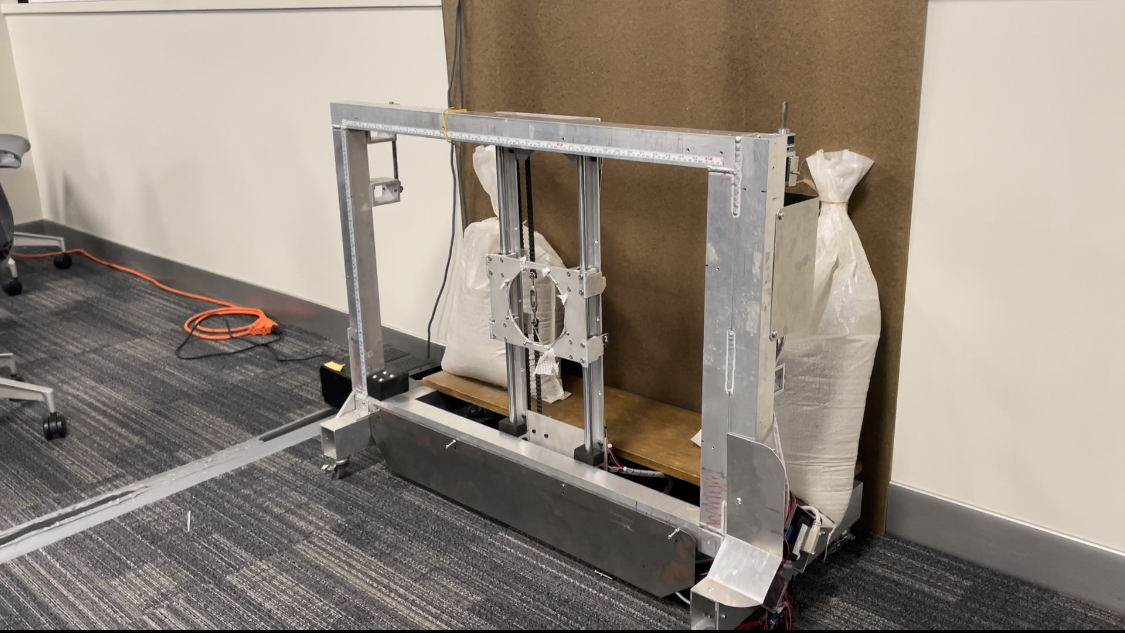

For this project we had a pitching machine at one end of the room and, at the other end, a stereo camera system mounted above a catcher on an XY linear rail system. The machine pitched a ball at 25 mph across the room, the stereo cameras tracked the ball at 30 frames per second, the system estimated the ball's trajectory, and then it moved the catcher to intercept. The goal was to catch 20 ‘catchable’ pitches in a row, and we were successful!

An uncatchable pitch was one that fell outside the catcher arm's physical range of motion because of variability in the pitching machine.

My Contributions

For this project, our team of 3 gathered the test images and calibrated the cameras together before diverging, with each of us writing our own implementation against the test set. Because of high variability in the pitching machine, the orientation of the cameras with respect to the catching machine, and the uncertainty of the catching machine, we each developed our own code before integrating the best parts into a working solution. The class was also organized so that we had to get initial results on our own before working together as a team.

The flow of the code is as follows:

- For each image, left and right, I detected the location of the ball using the Hough Circles method. To speed up computation, I used a variable region of interest, since the ball's starting location (the pitching machine) is known.

- Using the ball's location in each image, I calculated the disparity between the left and right detections and extracted the ball's 3D position in real space relative to the cameras.

- This would continue for 30 frames, after which it was time to move the catching machine before it was too late.

- A least-squares line of best fit through the tracked 3D points was used to extrapolate the ball's final location.

- The final ball location was converted into the catcher's coordinate frame, and the catcher was commanded to move to that location.
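The triangulation, extrapolation, and frame-conversion steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's code: it assumes a rectified stereo pair (so disparity is purely horizontal), a focal length `focal_px` in pixels, a camera `baseline`, and a principal point `(cx, cy)` taken from calibration. Following the "line of best fit" described above, it fits X and Y as straight lines in depth Z and evaluates them at a hypothetical catcher plane `z_catch`. All function names, and the translation-only `to_catcher_frame` helper, are hypothetical.

```python
def triangulate(xl, yl, xr, focal_px, baseline, cx, cy):
    """Return (X, Y, Z) in the left-camera frame for one rectified stereo match.

    Z = f * B / d, where d = xl - xr is the horizontal disparity in pixels.
    X and Y come from back-projecting the left-image pixel at depth Z.
    X, Y, Z share the units of `baseline` (e.g. inches).
    """
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline / disparity
    x = (xl - cx) * z / focal_px
    y = (yl - cy) * z / focal_px
    return x, y, z


def fit_line(ts, vs):
    """Ordinary least-squares fit of v = a + b * t; returns (a, b)."""
    n = len(ts)
    t_mean = sum(ts) / n
    v_mean = sum(vs) / n
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(ts, vs))
    b = sxy / sxx
    return v_mean - b * t_mean, b


def predict_intercept(points, z_catch):
    """Extrapolate tracked (X, Y, Z) points to the catcher plane Z = z_catch.

    X and Y are each fit as a straight line in Z. A straight line ignores
    gravity, so the vertical estimate is only an approximation.
    """
    zs = [p[2] for p in points]
    ax, bx = fit_line(zs, [p[0] for p in points])
    ay, by = fit_line(zs, [p[1] for p in points])
    return ax + bx * z_catch, ay + by * z_catch


def to_catcher_frame(x, y, offset_x, offset_y):
    """Hypothetical: shift camera-frame (X, Y) into catcher rail coordinates.

    Assumes the rail axes are parallel to the camera axes; a real setup would
    also apply the rotation found during calibration.
    """
    return x - offset_x, y - offset_y


if __name__ == "__main__":
    # One synthetic match: 70 px disparity, f = 700 px, 6-inch baseline.
    print(triangulate(390, 240, 320, focal_px=700, baseline=6.0, cx=320, cy=240))
    # -> (6.0, 0.0, 60.0)

    # Synthetic track lying exactly on X = 1 + 0.1 Z, Y = -2 + 0.05 Z.
    track = [(1 + 0.1 * z, -2 + 0.05 * z, z) for z in range(300, 100, -20)]
    x_hit, y_hit = predict_intercept(track, z_catch=0.0)
    print(to_catcher_frame(x_hit, y_hit, offset_x=0.5, offset_y=-1.0))
```

The closed-form line fit costs O(n) per axis, which keeps it cheap enough to rerun on every frame.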

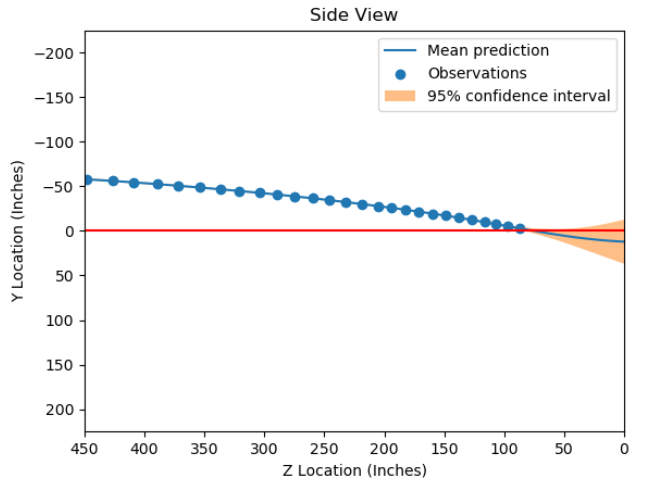

Since cameras are noisy sensors, I implemented a Kalman filter to track the ball's location in real time; however, we found that it didn't improve the implemented model at all. In our integrated project, we used the Contour Area function instead of Hough Circles to determine the ball's location, and this implementation proved to be less susceptible to noise. In addition, I estimated the ball's final location using Gaussian Process Regression (GPR) to understand how much uncertainty our final estimate carried. Although this was a fun thought exercise, it was impractical because GPR is far more computationally expensive than a simple least-squares fit.
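A Kalman filter of the kind mentioned above can be sketched per axis as a constant-velocity model. This is an illustrative version, not the filter used in the project: the state is position and velocity along one axis, only position is measured each frame (dt = 1/30 s at 30 frames per second), and the process noise `process_var` (a white-noise acceleration variance) and measurement noise `meas_var` are hypothetical tuning values. It has no gravity term, so the vertical axis would likely need an extra control input in practice.

```python
class ConstantVelocityKF:
    """1D Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, dt, process_var, meas_var, p0=0.0, v0=0.0):
        self.dt = dt
        self.q = process_var   # variance of the unmodeled acceleration
        self.r = meas_var      # variance of each position measurement
        self.x = [p0, v0]
        # Large initial covariance so the first measurements dominate the prior.
        self.P = [[1e3, 0.0], [0.0, 1e3]]

    def predict(self):
        dt, q = self.dt, self.q
        p, v = self.x
        self.x = [p + dt * v, v]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and the discrete
        # white-noise-acceleration Q = q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]].
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt ** 4 / 4, b + dt * d + q * dt ** 3 / 2],
            [c + dt * d + q * dt ** 3 / 2, d + q * dt ** 2],
        ]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance, with H = [1, 0]
        k0, k1 = a / s, c / s     # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]

    def step(self, z):
        """Predict one frame ahead, then correct with the measured position z."""
        self.predict()
        self.update(z)
        return self.x


if __name__ == "__main__":
    dt = 1 / 30  # 30 frames per second
    kf = ConstantVelocityKF(dt, process_var=1.0, meas_var=1e-4)
    for k in range(1, 31):
        # Noise-free synthetic track: position = 2 + 3 t.
        kf.step(2 + 3 * k * dt)
    print(kf.x)  # position and velocity estimates after one second of frames
```

One filter instance per axis (X, Y, Z) keeps the matrices 2x2, so the per-frame cost stays small.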

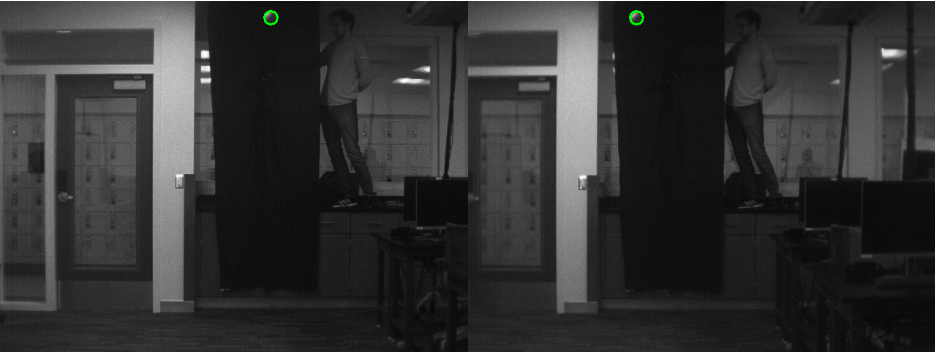

Ball detected at Frame 20 after pitch detection

GP estimation of ball location. For this pitch the ball is estimated to land 25.07 inches below the cameras and 0.837 inches to the left of the midpoint between the cameras.
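A GP estimate like the one in the figure can be illustrated with a small, dependency-free Gaussian Process Regression sketch. It is not the project's implementation: it assumes a zero-mean prior, a squared-exponential (RBF) kernel, and hand-picked hyperparameters `length_scale`, `signal_var`, and `noise_var`, and it regresses one coordinate against one scalar input (for example, lateral position against depth). It returns a predictive mean and variance, which is the uncertainty information GPR was used for above. The Cholesky factorization costs O(n³) in the number of tracked points, compared with O(n) for a closed-form line fit.

```python
import math


def rbf(a, b, length_scale, signal_var):
    """Squared-exponential kernel between two scalar inputs."""
    return signal_var * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def cholesky(A):
    """Lower-triangular L with A = L L^T for a symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Solve L y = b by forward substitution."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def solve_upper_from_lower(L, y):
    """Solve L^T x = y by back substitution."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_predict(xs, ys, x_star, length_scale=1.0, signal_var=1.0, noise_var=1e-4):
    """Posterior mean and variance of f(x_star) under a zero-mean GP prior."""
    n = len(xs)
    K = [
        [rbf(xs[i], xs[j], length_scale, signal_var) + (noise_var if i == j else 0.0)
         for j in range(n)]
        for i in range(n)
    ]
    L = cholesky(K)  # O(n^3): the dominant cost
    alpha = solve_upper_from_lower(L, solve_lower(L, ys))  # (K + noise I)^-1 y
    k_star = [rbf(x, x_star, length_scale, signal_var) for x in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    variance = signal_var - sum(vi * vi for vi in v)  # latent-function variance
    return mean, variance


if __name__ == "__main__":
    depths = [0.0, 1.0, 2.0, 3.0, 4.0]
    lateral = [0.0, 0.5, 1.0, 1.5, 2.0]
    print(gp_predict(depths, lateral, 2.5))   # between data points: small variance
    print(gp_predict(depths, lateral, 10.0))  # far from data: variance near signal_var
```

Because the prior mean is zero, predictions far from the tracked points fall back toward zero with growing variance, so extrapolating to the catcher plane would likely need a mean function (such as the least-squares line) with the GP modeling only the residuals.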

Things I Learned and Skills Developed

This project was one of my first introductions to real-time image processing. When writing code, I had to be conscious of how computationally expensive each approach was, because it would run 30 times a second. I also learned and applied 3D point extraction from 2D images in a cool and fun project.

Code

Code will be shared at a later time.