Monocular Visual Odometry

April 2022

Overview

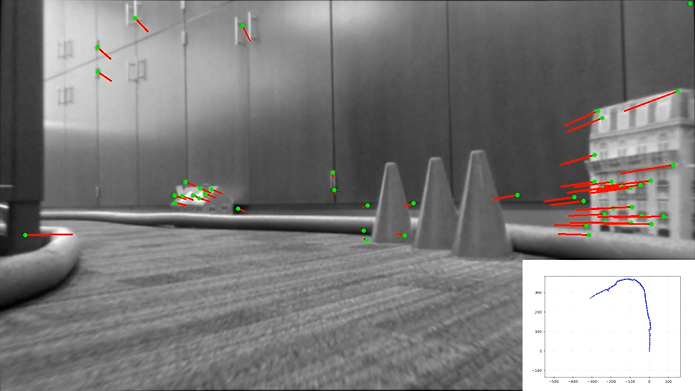

This is a simple monocular vision implementation done on an Intel Realsense D435 camera with no IMU or wheel odometry integration. The camera was mounted on the RC car used in the self-driving car project. Due to the limited power of the onboard Nvidia TX-1, there was no way this could be done online. Feature points were extracted using Sift features and matched using the K nearest neighbor algorithm. From these two frames of features points, the essential matrix was calculated and filtered using RANSAC. The new rotation and translation matrix were then added to calculate the new pose of the robot as it drove around the track.

My Contributions

I wrote this code from scratch. I tested my algorithm on the provided test set with established ground truth. When calculating the odometry of the car the only ground truth I had was that the car returned to where it started. If I had the time, I’m interested in running Visual Slam on my data set, but don’t expect to get around to that anytime soon.

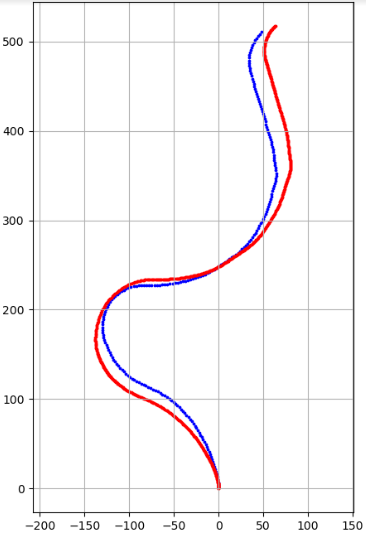

Training dataset. Red is ground truth, blue is estimated. As is common with all odometry implementations, the estimator tends to drift at corners.

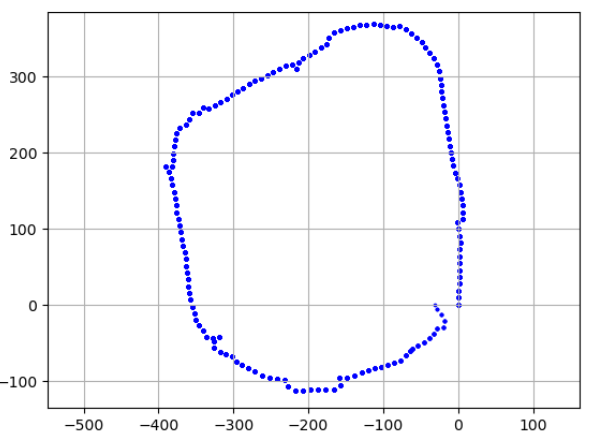

Track dataset, for this lap the car almost ended up at the same spot but as it rounded the corner it crashed into the left wall. Calculating visual odometry for this car was difficult because it would make jittery turning movements that cause the trajectory estimate to drift. If the car's turning had been smoother the estimate would not have suffered as much.

Things I learned and Skills Developed

I learned about some of the different approaches towards visual odometry and feature tracking between frames. My initial attempt at using optical flow, although fast, was more inaccurate compared to KNN with the sift features.

Code

Code will be linked later